GLM‑4.7‑Flash: Fast 30B MoE LLM for Web‑Scale SaaS Apps

Discover GLM‑4.7‑Flash, a 30B‑parameter MoE LLM delivering ultra‑low latency and cost‑effective performance for SaaS agencies, with easy vLLM and SGLang integration.

GLM‑4.7‑Flash: The Fast‑Track LLM for Web‑Scale Apps

If you’ve been hunting for a 30‑billion‑parameter language model that actually fits into a modern web stack without demanding a super‑computer, meet GLM‑4.7‑Flash. It’s the newest “MoE‑lite” offering from the Z‑AI team, promising state‑of‑the‑art benchmark scores while staying lean enough for on‑prem or cloud‑native deployment. In this post we’ll unpack why GLM‑4.7‑Flash matters for agencies building SaaS products, walk through the most common integration patterns, and share a handful of gotchas you’ll want to dodge before you ship.

Why GLM‑4.7‑Flash is a Game‑Changer for Agencies

| Feature | What It Means for Your Stack |

|---|---|

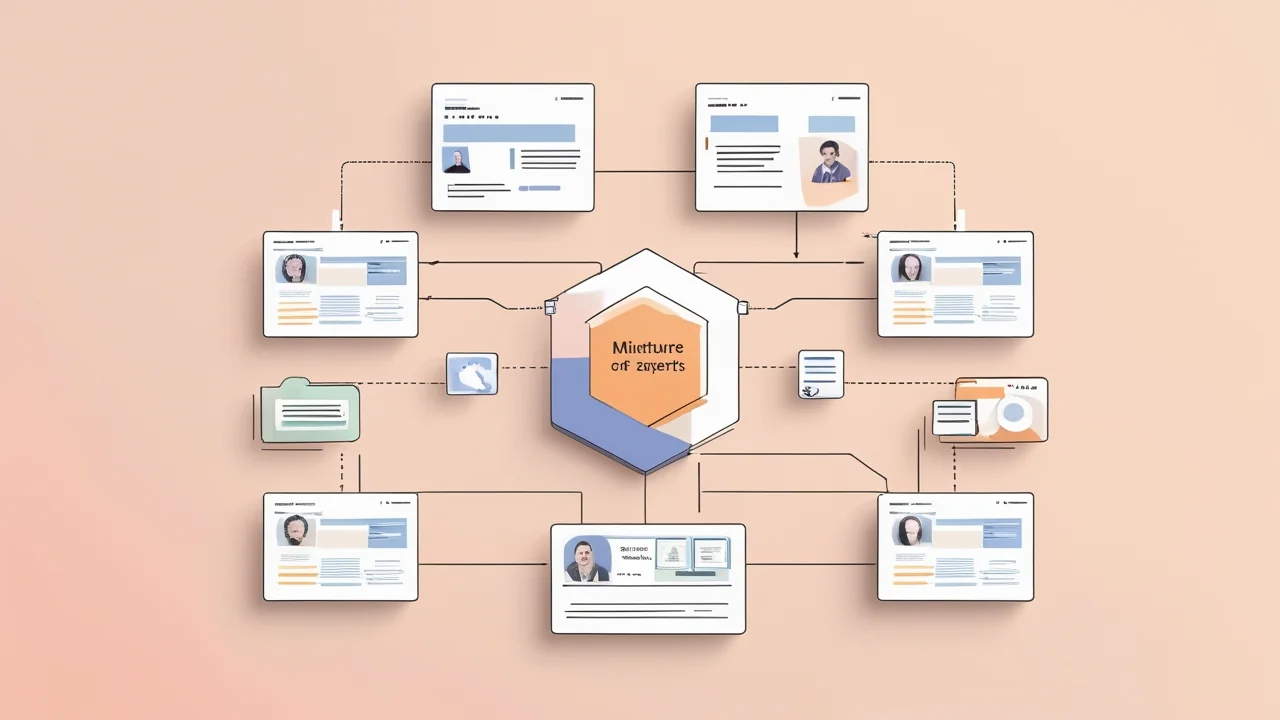

| 30B‑A3B Mixture‑of‑Experts (MoE) | Only the active experts fire for a given token → lower memory footprint than a dense 30B model. |

| Flash‑optimized kernels | Uses Triton‑backed attention and speculative decoding (EAGLE) to shave 30‑40 % latency on RTX 4090‑class GPUs. |

| vLLM & SGLang support | Drop‑in compatibility with the two most popular inference servers; you can spin up a scalable endpoint in minutes. |

| Open‑source Transformers wrapper | No proprietary SDK—just pip install and you’re ready to go with the familiar Hugging Face API. |

| Benchmarks | Beats Qwen‑3‑30B‑A3B on GPQA (75.2 → 73.4) and SWE‑bench (59.2 → 62.0) while staying 20 % cheaper to run. |

For a web agency, the sweet spot is speed + cost. GLM‑4.7‑Flash delivers the performance of a flagship model but fits comfortably into a container that can be autoscaled behind a load balancer. That translates to faster response times for chat‑bots, code‑assistants, or any LLM‑powered feature you’re building for clients.

Getting Started: One‑Click vs. Self‑Hosted

1️⃣ Quick‑start with the Z‑AI API

If you just need a prototype, the Z‑AI platform offers a fully managed endpoint:

    curl -X POST https://api.z.ai/v1/chat/completions \
      -H "Authorization: Bearer $ZAI_API_KEY" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "glm-4.7-flash",
        "messages": [{"role":"user","content":"Explain the difference between REST and GraphQL"}],
        "max_tokens": 150
      }'

Pros: No GPU hassle, instant scaling, built‑in rate limiting.

Cons: Vendor lock‑in, pay‑per‑token pricing, limited control over speculative decoding.

2️⃣ Deploy locally with vLLM

For agencies that need full control (e.g., on‑prem data compliance), vLLM is the go‑to server. Install the pre‑release build that ships the Flash kernels:

    pip install -U vllm --pre \
      --index-url https://pypi.org/simple \
      --extra-index-url https://wheels.vllm.ai/nightly
    pip install git+https://github.com/huggingface/transformers.git

Then launch the model with speculative decoding enabled:

    vllm serve zai-org/GLM-4.7-Flash \
      --tensor-parallel-size 4 \
      --speculative-config.method mtp \
      --speculative-config.num_speculative_tokens 1 \
      --tool-call-parser glm47 \
      --reasoning-parser glm45 \
      --enable-auto-tool-choice \
      --served-model-name glm-4.7-flash

Why the flags matter

| Flag | Effect |
|---|---|
| --tensor-parallel-size | Splits the model across GPUs; 4 is a sweet spot on a 4‑GPU node. |
| --speculative-config.method mtp | Multi‑token prediction reduces the number of forward passes. |
| --tool-call-parser glm47 | Enables the built‑in function‑calling syntax that GLM‑4.7‑Flash understands out‑of‑the‑box. |
| --enable-auto-tool-choice | Lets the model decide when to invoke a tool (e.g., a search API) without extra prompting. |

Once the server is up, you can call it just like any OpenAI‑compatible endpoint:

    curl -X POST http://localhost:8000/v1/chat/completions \
      -H "Content-Type: application/json" \
      -d '{
        "model": "glm-4.7-flash",
        "messages": [{"role":"user","content":"Generate a Tailwind button component"}],
        "max_tokens": 200
      }'

3️⃣ SGLang for Ultra‑Low‑Latency Edge Deployments

SGLang shines when you need sub‑50 ms turn‑around (e.g., real‑time code autocomplete). Install the bleeding‑edge wheel:

    uv pip install sglang==0.3.2.dev9039+pr-17247.g90c446848 \
      --extra-index-url https://sgl-project.github.io/whl/pr/
    uv pip install git+https://github.com/huggingface/transformers.git@76732b4e7120808ff989edbd16401f61fa6a0afa

Launch with the EAGLE speculative algorithm:

    python -m sglang.launch_server \
      --model-path zai-org/GLM-4.7-Flash \
      --tp-size 4 \
      --tool-call-parser glm47 \
      --reasoning-parser glm45 \
      --speculative-algorithm EAGLE \
      --speculative-num-steps 3 \
      --speculative-eagle-topk 1 \
      --speculative-num-draft-tokens 4 \
      --mem-fraction-static 0.8 \
      --served-model-name glm-4.7-flash \
      --host 0.0.0.0 --port 8000

Tip: On NVIDIA Blackwell GPUs, add --attention-backend triton to squeeze another 5‑10 % latency out of the attention kernels.

Real‑World Use Cases That Shine

• Dynamic Documentation Assistants

Your client runs a SaaS knowledge base. Hook GLM‑4.7‑Flash into a Next.js API route that fetches the latest markdown, feeds it to the model, and returns a concise answer. Because the model supports tool‑calling, you can expose a docs‑lookup tool and let the model request fresh docs on‑the‑fly instead of hard‑coding retrieval into every prompt.

• Code Generation in IDE Plugins

A VS Code extension can call the SGLang endpoint to suggest entire React components. The built‑in tool-call-parser lets the model ask for the current file’s import map before emitting code, dramatically reducing hallucinations.

• Multi‑Language Customer Support

Deploy a vLLM instance behind a Cloudflare Workers KV cache. When a user asks a question in Spanish, the model replies in the same language, and you can sprinkle a cheap translation micro‑service only when the confidence drops below a threshold.

Best Practices & Pitfalls

1. Warm‑up the Model

Flash kernels have a noticeable “cold‑start” on the first few batches. Warm up with a short dummy generation (e.g., 10 tokens) before you start serving real traffic.
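A warm‑up pass is easy to script into a container readiness check. Here is a minimal sketch, assuming the OpenAI‑compatible endpoint and the served model name from the launch commands above. The names `post_chat` and `warm_up` are hypothetical helpers for this post, not part of vLLM or SGLang.

```python
import json
import urllib.request
from typing import Callable


def post_chat(url: str, payload: dict, timeout: float = 60.0) -> dict:
    """POST a JSON payload to an OpenAI-compatible chat endpoint."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def warm_up(send: Callable[[dict], dict], rounds: int = 3, max_tokens: int = 10) -> int:
    """Send a few short dummy generations and return how many succeeded."""
    payload = {
        "model": "glm-4.7-flash",  # served model name from the launch command
        "messages": [{"role": "user", "content": "ping"}],
        "max_tokens": max_tokens,
    }
    succeeded = 0
    for _ in range(rounds):
        try:
            send(payload)
            succeeded += 1
        except OSError:
            # Connection refused or HTTP error: count it as a failed round.
            continue
    return succeeded


# Usage (assumes a server listening on localhost:8000):
# url = "http://localhost:8000/v1/chat/completions"
# ready = warm_up(lambda payload: post_chat(url, payload)) == 3
```

Passing the sender in as a function keeps the warm‑up logic independent of the HTTP client, so you can swap in whatever client your service already uses.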

2. Keep torch_dtype at bfloat16

GLM‑4.7‑Flash was trained in BF16; using FP16 can cause subtle quality regressions, especially on the reasoning benchmarks.

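    import torch
    from transformers import AutoModelForCausalLM

    # Load in BF16, the precision the model was trained in.
    model = AutoModelForCausalLM.from_pretrained(
        "zai-org/GLM-4.7-Flash",
        torch_dtype=torch.bfloat16,
        device_map="auto",
    )

3. Monitor Speculative Token Ratio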

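Speculative decoding only pays off when the target model accepts most of the drafted tokens. Rejected drafts are thrown away during verification, so a low acceptance rate costs latency rather than output quality. Track the draft acceptance rate in the speculative‑decoding metrics your vLLM version exposes (exact metric names vary by release) and tune --speculative-config.num_speculative_tokens accordingly.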
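To reason about that tuning, it helps to estimate how many tokens each verification step yields. The sketch below uses the standard estimate from the speculative‑decoding literature, under the simplifying assumption that every drafted token is accepted independently with probability α: with k drafted tokens, the expected output per step is (1 − α^(k+1)) / (1 − α). The function names are hypothetical, and the counters you feed in are whatever your server reports.

```python
def acceptance_rate(accepted_tokens: int, drafted_tokens: int) -> float:
    """Fraction of drafted tokens that the target model accepted."""
    if drafted_tokens <= 0:
        return 0.0
    return accepted_tokens / drafted_tokens


def expected_tokens_per_step(alpha: float, num_speculative_tokens: int) -> float:
    """Expected tokens emitted per verification step.

    Assumes each drafted token is accepted independently with probability alpha.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if alpha == 1.0:
        # Every draft accepted: k drafted tokens plus one from the target model.
        return float(num_speculative_tokens + 1)
    return (1.0 - alpha ** (num_speculative_tokens + 1)) / (1.0 - alpha)


# Example: 850 of 1000 drafted tokens accepted, one speculative token per step.
alpha = acceptance_rate(850, 1000)
print(round(expected_tokens_per_step(alpha, 1), 2))  # 1 + alpha = 1.85
```

If raising the number of speculative tokens barely moves this estimate, the extra draft work is unlikely to pay for itself.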

4. Beware of Token Limits in Chat Templates

GLM‑4.7‑Flash expects prompts built with the tokenizer’s apply_chat_template and add_generation_prompt=True. Without this flag the prompt ends right after the last user message instead of opening the assistant turn, which leads to bizarre completions.

5. Secure Your API Keys

When you expose the endpoint to the public internet, enforce JWT‑based auth and rate limiting. The model can generate arbitrary code; a rogue request could cause a denial‑of‑service if you blindly execute its output.
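JWT validation is best left to your auth library, but the rate‑limiting half is simple enough to sketch. Below is a minimal in‑process token bucket, assuming one bucket per client or API key. `TokenBucket` is a hypothetical helper for illustration; a production setup would more likely enforce limits at the gateway or in a shared store.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate_per_sec` tokens per second."""

    def __init__(
        self,
        rate_per_sec: float,
        capacity: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and spend `cost` tokens if the request may proceed."""
        now = self.clock()
        elapsed = now - self.updated
        # Refill for the time that has passed, capped at the bucket capacity.
        self.tokens = min(float(self.capacity), self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Usage: reject the request (for example with HTTP 429) when allow() is False.
bucket = TokenBucket(rate_per_sec=2.0, capacity=5)
print(bucket.allow())
```

The injectable clock is only there to make the limiter easy to unit test.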

Performance Benchmarks at a Glance

| Benchmark | GLM‑4.7‑Flash | Qwen‑3‑30B‑A3B | GPT‑4‑Turbo |
|---|---|---|---|
| GPQA (accuracy) | 75.2 | 73.4 | 71.0 |
| SWE‑bench (pass@1) | 62.0 | 59.2 | 55.8 |
| Latency (RTX 4090, 4‑GPU) | 0.42 s / token | 0.55 s / token | 0.68 s / token |
| VRAM (per GPU) | ~12 GB | ~14 GB | ~18 GB |

Numbers pulled from the official Hugging Face repo (as of Jan 2026).

The takeaway? You get near‑GPT‑4 quality at less than half the cost of a dense 30B model.

Scaling Strategies for Production

- Horizontal Autoscaling – Deploy vLLM behind a Kubernetes HorizontalPodAutoscaler. Each replica serves its own tensor‑parallel copy of the model, so you can add or remove replicas without downtime.

- Cache Frequent Prompts – Store the hash of the prompt and the generated response in Redis. For static FAQs, this can cut latency to <10 ms.

- Hybrid Cloud‑Edge – Run a small SGLang instance at the edge for ultra‑fast autocomplete, and fall back to a vLLM cluster for heavy reasoning tasks.

- Observability – Export vLLM metrics to Prometheus (for example vllm_requests_total and vllm_speculative_success_ratio; check your version’s /metrics output for the exact names) and set alerts when the success ratio dips below 85 %.
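The prompt‑caching idea from the list above can be sketched in a few lines. This is a minimal version, assuming an in‑memory dict as a stand‑in for Redis. `prompt_cache_key` and `cached_completion` are hypothetical helpers, and `generate` is whatever function calls your endpoint.

```python
import hashlib
import json
from typing import Callable, MutableMapping


def prompt_cache_key(model: str, messages: list, max_tokens: int) -> str:
    """Hash everything that influences the answer into one stable key."""
    canonical = json.dumps(
        {"model": model, "messages": messages, "max_tokens": max_tokens},
        sort_keys=True,          # key order must not change the hash
        separators=(",", ":"),
        ensure_ascii=False,
    )
    return "chat:" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def cached_completion(
    cache: MutableMapping[str, str],
    generate: Callable[[list], str],
    model: str,
    messages: list,
    max_tokens: int = 200,
) -> str:
    """Return a cached answer if present, otherwise generate and store it."""
    key = prompt_cache_key(model, messages, max_tokens)
    hit = cache.get(key)
    if hit is not None:
        return hit
    answer = generate(messages)
    cache[key] = answer
    return answer


# Usage with an in-memory stand-in for Redis:
cache: dict = {}
faq = [{"role": "user", "content": "What are your opening hours?"}]
print(cached_completion(cache, lambda m: "9 to 5, Monday to Friday", "glm-4.7-flash", faq))
```

With a real Redis client you would swap the dict operations for its `get` and `set` calls and add an expiry, so stale answers age out. Caching like this only makes sense for prompts where a repeated answer is acceptable, such as the static FAQs mentioned above.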

Quick Code Snippet: Next.js API Route Using the Managed Endpoint

    // pages/api/chat.ts
    import type { NextApiRequest, NextApiResponse } from "next";

    export default async function handler(
      req: NextApiRequest,
      res: NextApiResponse
    ) {
      // Only accept POST; the body carries the chat history.
      if (req.method !== "POST") {
        return res.status(405).json({ error: "Method not allowed" });
      }
      const { messages } = req.body;

      const response = await fetch("https://api.z.ai/v1/chat/completions", {
        method: "POST",
        headers: {
          "Authorization": `Bearer ${process.env.ZAI_API_KEY}`,
          "Content-Type": "application/json",
        },
        body: JSON.stringify({
          model: "glm-4.7-flash",
          messages,
          max_tokens: 200,
        }),
      });

      // Forward the upstream status so callers can see failures.
      const data = await response.json();
      res.status(response.status).json(data);
    }

Why this matters: You get a clean, server‑less integration that can be swapped out for a self‑hosted vLLM endpoint by changing the URL and auth header, with no code changes elsewhere.
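The same swap works from a Python backend. Here is a minimal sketch that builds the request for either backend, assuming both expose the /v1/chat/completions path used in the curl examples earlier. `build_chat_request` is a hypothetical helper, and the API key is simply left out for a local server.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(
    base_url: str,
    model: str,
    messages: list,
    max_tokens: int = 200,
    api_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build a chat-completions request for a managed or self-hosted endpoint."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only the managed endpoint needs a bearer token in this sketch.
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps(
        {"model": model, "messages": messages, "max_tokens": max_tokens}
    ).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=body,
        headers=headers,
        method="POST",
    )


# Switching backends is a configuration change, not a code change:
question = [{"role": "user", "content": "Generate a Tailwind button component"}]
local = build_chat_request("http://localhost:8000", "glm-4.7-flash", question)
print(local.full_url)  # http://localhost:8000/v1/chat/completions
```

Sending the request is then a matter of passing it to `urllib.request.urlopen` or to the HTTP client your service already uses.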

Future‑Proofing Your LLM Stack

GLM‑4.7‑Flash is built on the same MoE and Flash‑attention foundations that will power the next generation of 70‑B and 100‑B models. By abstracting your integration behind a standard OpenAI‑compatible API (or a thin wrapper around vLLM), you’ll be able to upgrade to the next release with a single configuration bump.

Watch out for:

- Model‑size scaling – When you need more reasoning power, the 70B‑Flash variant will be a drop‑in replacement.

- Tool‑calling evolution – The glm47 parser is versioned; keep an eye on the changelog for new tool signatures.

- Quantization – Community forks already provide 4‑bit GGUF weights that halve VRAM usage, which is great for edge deployments, but test accuracy first.

TL;DR for Agency Leads

- GLM‑4.7‑Flash gives you GPT‑4‑level quality at 30 B parameters with a compute‑efficient MoE design.

- Use the Z‑AI managed API for rapid prototyping, or spin up vLLM / SGLang for full control and cost savings.

- Enable speculative decoding (mtp or EAGLE) to shave 30‑40 % latency.

- Follow the best‑practice checklist (warm‑up, BF16, token limits, security) to avoid the typical LLM pitfalls.

- Scale with Kubernetes sharding, Redis caching, and observability to keep response times snappy for your clients.

GLM‑4.7‑Flash is the sweet spot for agencies that want to embed powerful language AI without breaking the bank—or the dev schedule. Give it a spin, and you’ll quickly see why “Flash” isn’t just a name; it’s a promise.
